Every time a patient takes a new medication, there’s a quiet risk no one talks about: an unexpected reaction. It might be a rash, a spike in liver enzymes, or a sudden drop in blood pressure. These are adverse drug reactions, and they’re one of the leading causes of hospitalizations worldwide. Traditional ways of spotting them (paper forms, delayed reports, siloed data) just don’t cut it anymore. That’s where clinician portals and apps come in. They turn safety monitoring from a reactive chore into a real-time, data-driven process. But using them right? That’s where most teams struggle.

What Exactly Are Clinician Portals for Drug Safety?

These aren’t fancy dashboards for data nerds. They’re tools built into or connected to the systems doctors and nurses already use every day: electronic health records (EHRs), clinical trial platforms, pharmacy databases. Their job? To catch safety signals before they become outbreaks.

Think of it like this: instead of waiting for a patient to come back with a problem or for a lab report to be manually filed, the system flags odd patterns automatically. A 72-year-old on a new blood thinner starts showing elevated creatinine levels. A cluster of three patients in the same ward develops unexplained dizziness after starting the same antibiotic. The portal notices. It doesn’t just alert you. It pulls up their full history, checks for drug interactions, compares it to global safety databases, and even suggests possible causes.

The most common platforms today fall into three buckets: enterprise tools like Cloudbyz for clinical trials, hospital-focused apps like Wolters Kluwer’s Medi-Span, and open-source or donor-funded systems like PViMS used in low-resource settings. Each has different strengths, but they all share the same core function: turning scattered clinical data into actionable safety insights.

How Do These Tools Actually Work?
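To make the idea of automatic flagging concrete, here is a minimal Python sketch of the ward cluster example mentioned earlier (three patients, same ward, same antibiotic, same symptom). It is an illustration only, not how any of the named platforms is implemented: the `Event` record shape, the `find_clusters` function, and the 14-day window are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Event:
    """One symptom recorded after a drug start (hypothetical record shape)."""
    patient_id: str
    ward: str
    drug: str
    symptom: str
    onset: date


def find_clusters(events, min_patients=3, window_days=14):
    """Return (ward, drug, symptom) keys where at least `min_patients`
    distinct patients had symptom onset within `window_days` of each other.
    Both defaults are illustrative assumptions, not clinical guidance."""
    grouped = defaultdict(list)
    for e in events:
        grouped[(e.ward, e.drug, e.symptom)].append(e)

    window = timedelta(days=window_days)
    flagged = []
    for key, group in grouped.items():
        group.sort(key=lambda e: e.onset)
        # Start a window at each event and count distinct patients inside it.
        for i, first in enumerate(group):
            patients = {e.patient_id for e in group[i:] if e.onset - first.onset <= window}
            if len(patients) >= min_patients:
                flagged.append(key)
                break
    return flagged


# Made-up events for illustration only.
events = [
    Event("p1", "4B", "antibiotic-A", "dizziness", date(2024, 3, 1)),
    Event("p2", "4B", "antibiotic-A", "dizziness", date(2024, 3, 3)),
    Event("p3", "4B", "antibiotic-A", "dizziness", date(2024, 3, 6)),
    Event("p4", "2A", "antibiotic-A", "dizziness", date(2024, 3, 2)),
]
print(find_clusters(events))  # [('4B', 'antibiotic-A', 'dizziness')]
```

A rule this simple only surfaces a candidate. Deciding whether the cluster is a real safety signal still needs the patient history and clinical review.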

These tools don’t magic up answers. They connect. Most modern portals use FHIR or HL7 standards to pull live data from EHRs, lab systems, and prescription records. When a patient’s lab result hits the system, the portal cross-references it with known drug safety profiles. If a pattern emerges (say, five patients on Drug X show similar kidney changes within 10 days), the system triggers a signal.

Cloudbyz’s platform, for example, processes data in under 15 minutes from the moment a lab result is entered. That means a safety officer in a clinical trial center in Chicago can see a potential issue from a site in Nairobi before the end of their workday. The system doesn’t just show the numbers. It links them to the patient’s full clinical story: allergies, other meds, past reactions, even notes from the nurse about how the patient looked during their visit.

For hospitals, tools like Medi-Span work inside the EHR. As a doctor types a prescription, the system pops up a warning: “This combination increases risk of bleeding by 3.2x based on 12,000 patient records.” It’s not a guess. It’s evidence pulled from real-world data. One hospital in Wisconsin reported 187 prevented adverse events in six months just from these alerts.

But here’s the catch: these tools are only as good as the data feeding them. If your EHR doesn’t capture the right details, like whether the patient took the drug as prescribed or had a recent infection, the portal will miss signals or flag false ones. That’s why 22% of automated alerts in 2023 turned out to be noise, according to the FDA.

Choosing the Right Platform for Your Setting

You wouldn’t use a race car to haul firewood. Same goes for safety tools.

If you’re running a clinical trial with hundreds of sites and complex data flows, Cloudbyz is a top choice. It integrates with CDISC standards (SDTM, ADaM), handles massive datasets, and cuts signal detection time by 40% compared to old-school databases. But it’s expensive (around $185,000 a year) and takes 6 to 12 weeks to set up. You need a team that knows data mapping and regulatory compliance.

For hospitals, Wolters Kluwer’s Medi-Span dominates. It’s built into Epic and Cerner, works with existing workflows, and has the highest user satisfaction in U.S. hospitals over 500 beds. But it’s not perfect. Clinicians report “alert fatigue”: too many warnings, too many false alarms. One nurse in Minnesota said, “I’ve stopped looking at the pop-ups. They’re always about drugs I’d never prescribe.” That’s a real problem. If the system cries wolf too often, people stop listening.

In places with limited internet, unstable power, or no IT staff, such as rural clinics in Kenya or Laos, PViMS is the go-to. It’s free, runs on any browser, and uses simple drop-down menus instead of complex forms. A clinician in Uganda told me, “I used to spend two hours filling out paper forms. Now it takes 15 minutes, even on a slow phone.” But PViMS doesn’t have AI. It doesn’t predict. It just collects. That’s fine for basic reporting, but it won’t spot hidden patterns in complex cases.

And then there’s clinDataReview, an open-source tool loved by regulatory teams. It’s built in R, generates FDA-compliant reports automatically, and is 99.8% accurate in detecting safety signals during testing. But you need to know how to code. If your safety officer isn’t comfortable with R scripts, this tool will sit unused.

What Skills Do You Actually Need to Use These Tools?

It’s not just about clicking buttons. Effective use requires three things:

- Clinical pharmacology knowledge: You have to understand how drugs work in the body, what side effects are common, and which combinations are dangerous. No tool can replace this.

- Data literacy: Can you read a graph? Understand what a 95% confidence interval means? Know the difference between correlation and causation? If not, you’ll misinterpret alerts.

- Regulatory awareness: FDA 21 CFR Part 11, EMA guidelines, ICH E2B standards. These aren’t just paperwork. They’re legal requirements. If your reports aren’t traceable, auditable, and reproducible, you’re at risk.
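For the data literacy point, it can help to see where a 95% confidence interval comes from. The sketch below computes the proportional reporting ratio (PRR), one common disproportionality measure in pharmacovigilance, with the usual log-scale approximation for its interval. Whether any of the portals named here use this exact statistic is not something the article states; the function name `prr_with_ci` and the counts in the example are invented for illustration.

```python
import math


def prr_with_ci(a, b, c, d, z=1.96):
    """Proportional reporting ratio with an approximate 95% CI.

    2x2 table of spontaneous reports:
      a: drug of interest, event of interest
      b: drug of interest, any other event
      c: all other drugs, event of interest
      d: all other drugs, any other event
    """
    if a <= 0 or c <= 0 or b < 0 or d < 0:
        raise ValueError("a and c must be positive; b and d must be non-negative")
    prr = (a / (a + b)) / (c / (c + d))
    # Standard error of ln(PRR); the interval is built on the log scale.
    se = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    lower = math.exp(math.log(prr) - z * se)
    upper = math.exp(math.log(prr) + z * se)
    return prr, lower, upper


# Invented counts: 20 of 1,000 reports for the drug mention the event,
# versus 100 of 19,000 reports for all other drugs.
prr, lower, upper = prr_with_ci(a=20, b=980, c=100, d=18900)
print(f"PRR = {prr:.2f}, 95% CI = ({lower:.2f}, {upper:.2f})")
```

An interval that sits entirely above 1 says the event is reported disproportionately often with this drug. It does not say the drug caused the event, which is exactly the correlation versus causation distinction above.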

Common Pitfalls and How to Avoid Them

Here’s what goes wrong, and how to fix it:

- Alert fatigue: Too many false positives. Solution: Tune thresholds. Don’t just accept default settings. Work with your vendor to adjust sensitivity based on your patient population.

- Integration failure: The portal doesn’t talk to your EHR. Solution: Start with a pilot. Test with one drug, one department, one clinic. If it works there, scale.

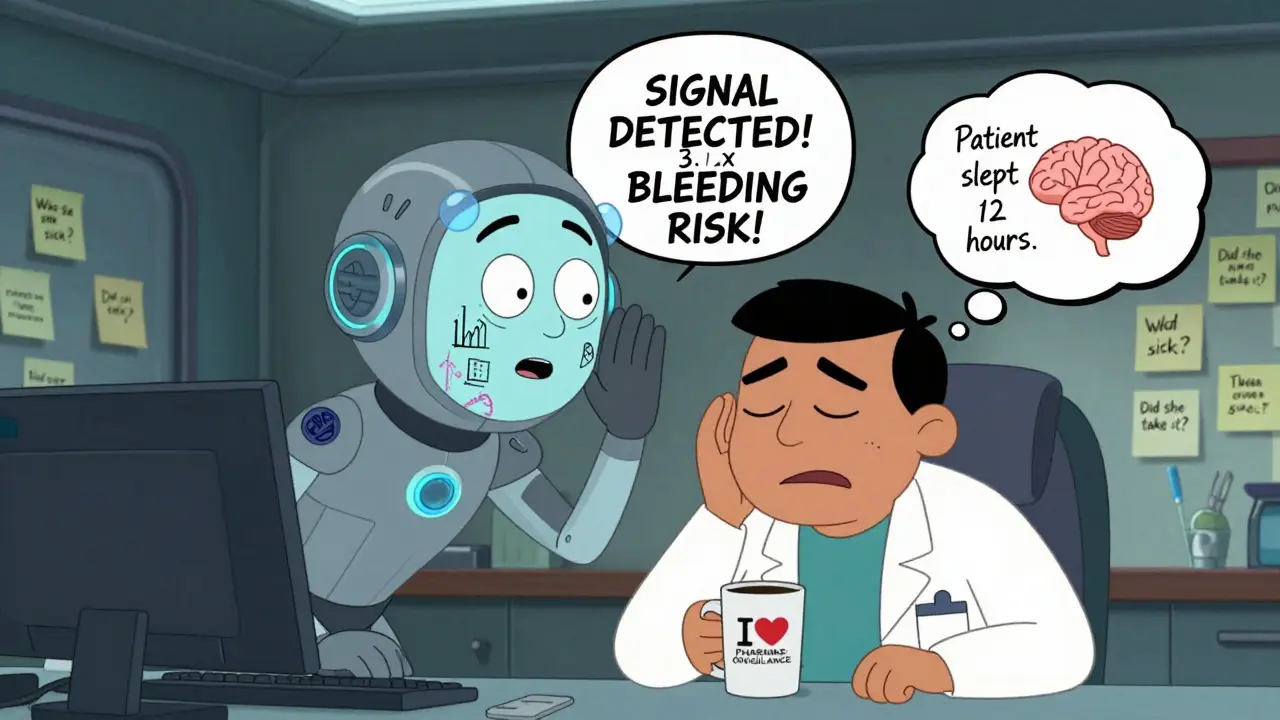

- Over-reliance on AI: Assuming the system knows better than the clinician. Solution: Always review alerts with clinical context. Ask: Did the patient take the drug? Did they have an infection? Are they on other meds? The algorithm doesn’t know.

- Ignoring unstructured data: Most safety signals hide in doctor’s notes. “Patient seemed unusually tired,” “Complained of dizziness after first dose.” Current systems only catch 65-78% of these. Train staff to flag these notes manually.
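The threshold tuning suggested for alert fatigue can be grounded in your own review history. As a rough sketch, assume each past alert has a numeric risk score and a clinician verdict on whether it was a real signal; neither that data shape nor the `evaluate_threshold` function comes from any vendor, and the scores below are made up. Comparing candidate thresholds then shows the trade-off between noise and missed signals.

```python
def evaluate_threshold(reviewed_alerts, threshold):
    """Summarize what a score threshold would have done on past alerts.

    reviewed_alerts: list of (score, was_real) pairs, where was_real is the
    clinician's verdict after review (hypothetical data shape).
    """
    fired = [(score, real) for score, real in reviewed_alerts if score >= threshold]
    total_real = sum(1 for _, real in reviewed_alerts if real)
    caught = sum(1 for _, real in fired if real)
    return {
        "fired": len(fired),
        # Share of fired alerts that reviewers judged to be noise.
        "noise_share": (len(fired) - caught) / len(fired) if fired else 0.0,
        # Share of real signals that would still have been alerted on.
        "sensitivity": caught / total_real if total_real else 1.0,
    }


# Made-up review history for illustration only.
history = [
    (0.95, True), (0.90, True), (0.80, False), (0.75, True), (0.60, False),
    (0.55, False), (0.40, True), (0.30, False), (0.20, False), (0.10, False),
]

for t in (0.3, 0.5, 0.7):
    print(t, evaluate_threshold(history, t))
```

In this toy history, raising the threshold from 0.5 to 0.7 halves the noise share without losing any signal that 0.5 caught, while only 0.3 catches the low-scoring real signal. That last case is why a threshold change should be reviewed clinically rather than chosen on noise reduction alone.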

What’s Next? The Future of Drug Safety Monitoring

The next wave is AI that doesn’t just detect signals but explains them. IQVIA’s new “AI co-pilot” shows safety officers not just that something’s wrong, but why. It pulls in similar cases, past literature, and even patient demographics to build a narrative. Early tests show it cuts validation time by 35%.

The FDA is pushing for explainable AI. By 2026, any algorithm used in safety monitoring must be able to show its logic: no black boxes. That’s good. It means tools will become more reliable, even if adoption slows. Gartner predicts 80% of pharmacovigilance teams will use AI-augmented tools by 2027. But here’s the key: human oversight stays mandatory. The machine spots the pattern. The clinician decides if it matters.

The real winners will be platforms that don’t just collect data but fit into the workflow. If a tool adds steps, slows things down, or forces you to switch screens, it’ll be ignored. The best systems disappear into the background, like a seatbelt in a car. You don’t think about it… until you need it.

Getting Started: Your First Steps

If you’re ready to start:

- Identify your biggest safety gap. Is it delayed reporting? Missed interactions? Poor data quality?

- Map your current workflow. Where do safety reports get created? Who reviews them? How long does it take?

- Choose a pilot drug. Pick one with known risks or high usage in your setting.

- Start small. Use a free tool like PViMS or clinDataReview to test the process before investing in enterprise software.

- Train your team. Don’t just show them the buttons. Teach them how to think with the data.

Can clinician portals replace human safety reviewers?

No. Portals flag potential issues, but they can’t interpret context. A spike in liver enzymes might mean a drug reaction, or it might mean the patient had a viral infection last week. Only a trained clinician can make that call. AI supports, but doesn’t replace, human judgment.

Are these tools only for large hospitals or pharmaceutical companies?

No. While enterprise tools like Cloudbyz are expensive, simpler options exist. PViMS is free and used in over 28 low-resource countries. Even small clinics can use EHR-embedded tools like Medi-Span, which cost as little as $22,500 a year. The key is matching the tool to your size and needs.

How long does it take to implement a drug safety portal?

It varies. For hospital systems like Medi-Span, 4-6 weeks with staff training. For clinical trial platforms like Cloudbyz, 8-12 weeks due to complex data mapping. Free tools like PViMS can be up and running in 3-5 weeks, but depend on internet access and local support.

What’s the biggest mistake people make when using these tools?

Assuming the system is infallible. Automated alerts produce false positives, with rates sometimes as high as 22%. Ignoring clinical context, skipping manual reviews, or turning off alerts because they’re too noisy are all common errors that lead to missed signals or alert fatigue.

Do I need to be a tech expert to use these platforms?

Not for basic use. Most portals have intuitive interfaces. But to get the most out of them (tuning alerts, interpreting complex reports, troubleshooting integrations), you need staff with data literacy and clinical pharmacology training. Training typically takes 80-120 hours.

i just wish these portals would stop yelling at me every time someone takes ibuprofen. i get it, it's a risk, but half the alerts are for stuff we've seen a thousand times. it's exhausting. i just want to help patients, not play whack-a-mole with false alarms.

lol i love how people act like these tools are magic. they're not. they're just fancy spreadsheets with pop-ups. the real work is still in the notes, the conversations, the gut feeling you get when a patient says 'i just don't feel right'. no algorithm picks up on that. train your staff to listen, not just click.

you people are so naive. these portals are just surveillance tools disguised as safety. the pharma companies feed them data, the FDA looks the other way, and suddenly you're 'preventing' reactions by suppressing reports. it's all a money game. if you really cared about safety, you'd ban these drugs outright instead of making nurses chase ghosts.